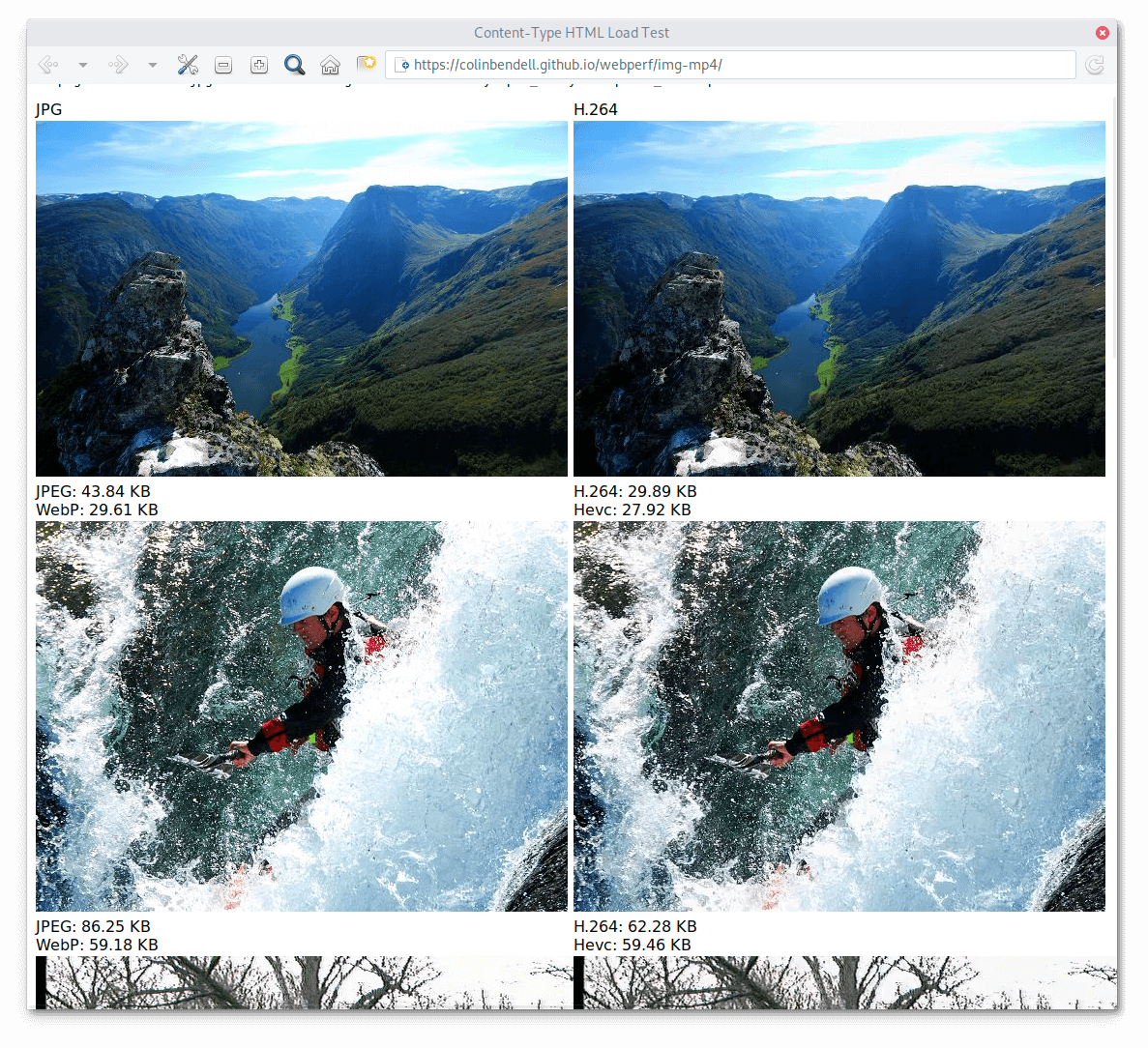

Using videos in the <img> HTML tag can lead to more responsive web-page loads in most cases. Colin Bendell blogged about this topic; make sure to read his post on the Cloudinary website. As it turns out, this feature has been supported for more than 2 years in Safari, but the WebKitGTK and WPEWebKit ports caught up only recently. Read on for the crunchy details, or skip to the end of the post if you want to try this new feature.

As WebKitGTK and WPEWebKit already heavily use GStreamer for their multimedia backends, it was natural for us to also use GStreamer to provide the video ImageDecoder implementation.

The preliminary step is to hook our new decoder into the MIMETypeRegistry and into the ImageDecoder.cpp platform-agnostic module. This is where the main decoder branches out to platform-specific backends. Then we need to add a new class implementing WebKit’s ImageDecoder virtual interface.

First you need to implement supportsMediaType(). For this method we already had all the code in place. WebKit scans the GStreamer plugin registry and, depending on the plugins available on the target platform, the RegistryScanner builds a mime-type cache. Our new image decoder just needs to hook into this component (exposed as a singleton) so that we can be sure the decoder will be used only for media types supported by GStreamer.
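
The pattern described above (a singleton cache that the decoder queries before accepting a media type) can be sketched roughly as follows. This is only an illustration, not WebKit's code: MimeTypeCacheSketch, registerType() and decoderSupportsMimeType() are hypothetical names, and the cache is filled by hand here instead of by scanning the GStreamer plugin registry.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <utility>

// Hypothetical stand-in for the registry scanner: a process-wide cache of
// the mime-types that the installed plugins are able to decode.
class MimeTypeCacheSketch {
public:
    // Function-local static: the cache is created once, on first use.
    static MimeTypeCacheSketch& singleton()
    {
        static MimeTypeCacheSketch cache;
        return cache;
    }

    // Assumption: a real scanner would derive these entries from the plugin
    // registry; in this sketch they are registered manually.
    void registerType(std::string mimeType) { m_types.insert(std::move(mimeType)); }

    bool isSupported(const std::string& mimeType) const
    {
        return m_types.count(mimeType) > 0;
    }

private:
    MimeTypeCacheSketch() = default;
    std::set<std::string> m_types;
};

// Hypothetical decoder-side check: accept a media type only if the cache
// says a decoder is available for it.
bool decoderSupportsMimeType(const std::string& mimeType)
{
    return MimeTypeCacheSketch::singleton().isSupported(mimeType);
}
```

With "video/mp4" registered in the cache, decoderSupportsMimeType("video/mp4") returns true, while any unregistered type is rejected, so the image decoder never gets picked for content that cannot be decoded on the target platform.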

The next most important parts of the decoder are its constructor and the setData() method. This is where the decoder receives encoded data, as a SharedBuffer, and performs the decoding. Because this method is called synchronously from a secondary thread, we run the GStreamer pipeline there until the video has been decoded entirely. Our pipeline relies on the GStreamer decodebin element and WebKit’s internal video sink. For the time being, hardware-accelerated decoding is not supported, though we should be able to fix this soon. Once all samples have been received by the sink, the decoder notifies its caller using a callback. The caller then knows it can request decoded frames.
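
To make the control flow above more concrete, here is a toy model of a blocking setData() that only notifies its caller once every sample is available. It contains no GStreamer code at all: BlockingDecoderSketch, DecodedSample and the "one non-empty chunk equals one frame" rule are assumptions made purely for illustration, standing in for the real pipeline run.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical placeholder for a decoded video frame.
struct DecodedSample {
    std::size_t index;
};

class BlockingDecoderSketch {
public:
    // Called once, with the number of decoded samples.
    using DoneCallback = std::function<void(std::size_t)>;

    explicit BlockingDecoderSketch(DoneCallback onAllSamplesDecoded)
        : m_onAllSamplesDecoded(std::move(onAllSamplesDecoded))
    {
    }

    // Blocks the calling thread until all the data has been "decoded".
    // Assumption: each non-empty chunk yields exactly one sample; the real
    // decoder would run a pipeline over the whole buffer instead.
    void setData(const std::vector<std::vector<std::uint8_t>>& encodedChunks)
    {
        m_samples.clear();
        for (const auto& chunk : encodedChunks) {
            if (chunk.empty())
                continue; // Nothing to decode in this chunk.
            m_samples.push_back(DecodedSample { m_samples.size() });
        }
        // Only now does the caller learn that frames can be requested.
        if (m_onAllSamplesDecoded)
            m_onAllSamplesDecoded(m_samples.size());
    }

    std::size_t sampleCount() const { return m_samples.size(); }

private:
    DoneCallback m_onAllSamplesDecoded;
    std::vector<DecodedSample> m_samples;
};
```

The point of the design is that the caller never sees a half-decoded state: the callback fires after the last sample has been stored, which is acceptable here only because setData() runs on a secondary thread and not on the main one.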

Last, the decoder needs to provide decoded frames! This is implemented using the createFrameImageAtIndex() method. Our decoder implementation keeps an internal Map of the decoded samples. We subclassed MediaSampleGStreamer to provide an image() method, which returns the Cairo surface representing the decoded frame. Again, we don’t support GL textures here yet. Some more infrastructure work is likely going to be needed in that area of our WebKit ports.
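
As a rough sketch of the lookup described above, the following keeps decoded frames in a map and hands them out by index. FrameImageSketch and FrameStoreSketch are hypothetical names, the frame is a plain pixel buffer instead of a Cairo surface, and keying the map by frame index is an assumption of this example.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for the surface that represents a decoded frame.
struct FrameImageSketch {
    int width;
    int height;
    std::vector<unsigned char> pixels;
};

class FrameStoreSketch {
public:
    // Stores (or replaces) the decoded frame for the given index.
    void addFrame(std::size_t index, std::shared_ptr<FrameImageSketch> image)
    {
        m_frames[index] = std::move(image);
    }

    // Returns the frame at the given index, or nullptr if that frame has
    // not been decoded.
    std::shared_ptr<FrameImageSketch> frameImageAtIndex(std::size_t index) const
    {
        auto it = m_frames.find(index);
        if (it == m_frames.end())
            return nullptr;
        return it->second;
    }

    std::size_t frameCount() const { return m_frames.size(); }

private:
    std::map<std::size_t, std::shared_ptr<FrameImageSketch>> m_frames;
};
```

Returning a null pointer for an unknown index lets the caller tell "not decoded" apart from a valid frame without throwing.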

Our implementation of the video ImageDecoder lives in ImageDecoderGStreamer, which will be shipped in WebKitGTK and WPEWebKit 2.30, around September/October. But what if you want to try this already? Well, building WebKit can be a tedious task. We’ve been hard at work at Igalia to make this a bit easier using a new Flatpak SDK. So, you can either try this feature in Epiphany Tech Preview or (surprise!) with our new tooling, which lets you download and run nightly binaries from the upstream WebKit build bots:

    $ wget https://raw.githubusercontent.com/WebKit/webkit/master/Tools/Scripts/webkit-flatpak-run-nightly
    $ chmod +x webkit-flatpak-run-nightly
    $ python3 webkit-flatpak-run-nightly MiniBrowser https://colinbendell.github.io/webperf/img-mp4/

This script locally installs our new Flatpak-based developer SDK in ~/.cache/wk-nightly and then downloads a zip archive of the build artefacts from servers recently brought up by my colleague Carlos Alberto Lopez Perez, many thanks to him :). The downloaded zip file is unpacked in /tmp and kept around in case you want to run this again without re-downloading the build archive. Flatpak is then used to run the binaries inside a sandbox! This is a nice way to run the bleeding edge of the web-engine, without having to build it or install any distro package.

Implementing new features in WebKit is one of the many areas of expertise we are involved in at Igalia. Our multimedia team is always on the lookout to help folks with projects involving GStreamer, WebKit, or both! Don’t hesitate to reach out.